Rustic Retreat

Hot Projects

Live broadcasts and documentation from a remote tech outpost in rustic Portugal. Sharing off-grid life, the necessary research & development and the pursuit of life, without centralized infrastructure.

Subscribe to our new main project Rustic Retreat on the project's own website.


In order to have total system control for crew/guests, to be more transparent about the technology and infrastructure in use, and to give people another way to get into the detailed aspects and challenges of this project, the Virtual Flight Control Center (VFCC) is now open to the public. This is just a first step: as more technology is implemented, the VFCC will gain more features. It's a little early in the project's timeline, but it became necessary as a proving ground for the UCSSPM.

If you want to see more than just screenshots open the Virtual Flight Control Center (VFCC).

Be aware that we do not care about testing/supporting closed-source, IP-encumbered browsers at all. Also, you'll probably need at least 7" of display size, since it doesn't automatically resize yet and Grafana's graph rendering has a tendency to overload mobile browsers, so phones are currently not the best choice to play with it :) For obvious reasons, the public VFCC doesn't allow control and data access is read-only, so you can play with it as much as you like without having to worry about breaking anything.

It's still a very crude and hackish demonstrator, combining the following open-source components:

Due to system maintenance on the host machine of one of our VMs there will be a service outage on most of our communication infrastructure. Currently IRC, XMPP, STUN/TURN are offline and will resume service as soon as the host is back up. Webservices, Cloudmap, git, VFCC and mailing-lists are undisturbed.

When you're at this year's Chaos Communication Congress (31C3) and interested in RF/satellite hacking, you should make sure to find your way to Saal 1 on Day 2 at 16:45 (localtime) to enjoy Sec/schneider's talk about Iridium Pager Hacking.

After seeing xfce-planet in action @MUCCC, Sec used it to create this awesome timelapse video for more presentation eye-candy, showing all Iridium satellite positions and orbits. Very nice idea indeed!

Today, another pull request flew in, enhancing the picoreflow software stack with MAX6675 compatibility. Git and GitHub are great tools for open collaboration, but it takes some time to get used to them. Of course, the GitHub auto-merge feature is great and works pretty reliably, but I still prefer to review and test PRs locally on the command line before actually merging them.

GitHub also offers some command-line foo for this, as you can see in the screenshot above, but I'd like to use this opportunity to share, in a real-world, step-by-step example, another way to test, handle and merge GitHub pull requests even more easily and comfortably, in case you're new to git/GitHub and don't know about this yet.

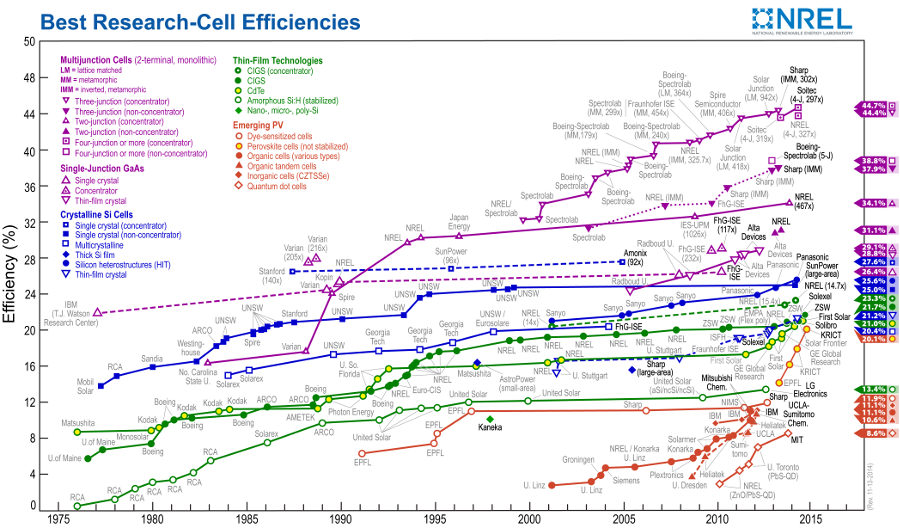

There are often reports about breakthroughs in alternative technologies, and when I look, I can find charts claiming that in 2014/2015 top solar conversion efficiency is reported to be at about 45%. However, when I look harder, I don't seem to find obtainable panels above 21%. The highest grade I could get were the Mobile Technology MT-ST110 panels on the odyssey. I can't help but ask myself:

Now, a couple of researchers with interdisciplinary and seemingly non-related backgrounds published the concept to apply a quasi-random nano-structure to a solar (PV) cell in order to increase the absorption of photons (decrease reflection) and reported an overall broadband absorption enhancement of that solar cell to be 21.8%, when a Blu-Ray land/pit pattern is applied.

From the little info publicly available, it seems they've just tried different materials for this approach and discovered that Blu-Ray's compression algorithm (tested with Jackie Chan - Police Story 3: Supercop) creates a favorable quasi-random array of lands and pits (0s and 1s) with feature sizes between 150 and 525 nm, which seems to work quite well for light-trapping (photon management) applications over the entire solar spectrum.

http://www.mccormick.northwestern.edu/news/articles/2014/11/ http://www.extremetech.com/extreme/194938

IMHO, it should be easy to use a real random number generator as a source, create some land/pit patterns following Blu-Ray specs (physical), run some tests and apply that to the production process. If it works, the sudden increase of convertible sustainable energy would be more than just considerable.
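To make the idea a bit more concrete, here is a minimal sketch of how such a pattern could be generated. It is only an illustration under stated assumptions: the 75 nm step (`CHANNEL_BIT_NM`) is a hypothetical value chosen so that run lengths of 2 to 7 steps reproduce the 150 to 525 nm feature sizes mentioned above, `random_track` is a made-up helper name, and `random.SystemRandom` (operating system entropy) merely stands in for a real hardware random number generator.

```python
import random

# Assumption: one length step of 75 nm, so runs of 2..7 steps
# give the 150..525 nm feature sizes quoted in the text.
CHANNEL_BIT_NM = 75
MIN_RUN, MAX_RUN = 2, 7


def random_track(n_features, rng=None):
    """Return a list of (kind, length_nm) tuples, alternating land and pit."""
    rng = rng or random.SystemRandom()  # OS entropy, stand-in for a hardware RNG
    track = []
    is_pit = False
    for _ in range(n_features):
        length_nm = rng.randint(MIN_RUN, MAX_RUN) * CHANNEL_BIT_NM
        track.append(("pit" if is_pit else "land", length_nm))
        is_pit = not is_pit
    return track


if __name__ == "__main__":
    for kind, length_nm in random_track(8):
        print(f"{kind:>4}: {length_nm} nm")
```

Whether a purely random run-length distribution traps light as well as the pattern produced by real disc content is exactly what the tests mentioned above would have to show.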

Let's come back here in the future and measure how long it took to get into a real product. Especially in constrained off-grid systems like Apollo-NG, with such little usable surface area, every 1% of efficiency counts for a lot.

I know that we usually fly over webpages just scanning text for keywords and structural bits and pieces of information, with the least amount of attention we can spare. We often don't really read anymore. But today I would like you to slow down, take a moment, get your favorite beverage and sit down to actually read this, because a part of my current work presented a perfect opportunity to go into learning, knowledge transfer, inspiration and of course the misconception of originality as well.

When I was a small boy, I was often asked by grown-ups what I wanted to be when I grew up. I always answered: I will study cybernetics. The kicker is, I just said that because I knew it would please my mother, so she could enjoy showing off what a smart and ambitious son she had. But as it turned out, I obviously wasn't smart enough for that :)

For the past couple of weeks I've been scrambling like hell and to get some of the work done I had to create an army of slaves to do it first. So I've been busy building robots of all different kinds. Some of them are made of real hardware. Others exist in software only and one of those creations shall be used as an example, to reflect the learning process involved.

When I look at today's ways of “learning” in school and universities I am not surprised that we are breeding generations of mindfucked zombies, endlessly repeating the same mistakes, trying to use the one tool they've learned for everything (even if completely incompatible). Pointless discussions about originality, plagiarism and unique revolutionary ideas. Patents. Intellectual property. Bullshit. Many people with that kind of background I meet cannot even say “I don't know”. They will scramble and come up with a bullshit answer for everything, only to appear knowing. Why? Because they have been taught that not knowing something is equal to failure. And failure will lead to becoming an unsuccessful loser, who will not get laid, right?

But how should anyone's brain be able to really learn something when it believes (even when just pretending) that it already knows it? You cannot fill a cup that already believes itself to be full. When you pretend to be an expert, you obviously cannot even ask questions that would help you to really understand something, because then your “expert status” would fade away. So better make your heads empty, because the more you learn, the more you realize, that you don't know shit.

Which turns our focus to failure. From all I could learn about efficient learning, failure was always the biggest accelerator for learning. If everything went smoothly and I didn't have to do much to learn/realize how or why something worked I didn't learn anything about it, because I simply didn't have to. Only failure and deep engagement with whatever I tackled really let my brain comprehend things to a level where I can say I've learned more about it.

This is a little drawing I made modeling how my personal learning process works and after looking at a lot of history, it seems to me that we can apply it over all ages and societies as well. Only that we've managed to carry cultural ballast with us, which tries to pretend that the right half of the circle doesn't exist or when not denied is always associated with negative educational/social metrics/values (grades/recognition).

Before we had Internet, it was easy to travel somewhere, copy what other people did, come back and pretend it's one's own “original” work (simply lie about it). No one could really check it. Especially not on individual mass scale. It was easy to sell the illusion of revolutionary and “original” work. But then, why are so many “original” pop-songs (not the countless covers of these songs anyways) basically based on the melodies of countless local folk songs from all ages from all over the world? Or why were the Americans so eager to pull off Operation Paperclip after WW2? Why has there been and is so much industrial/military espionage to get the secret plans of “the other guys” if they were all so original? Well they weren't, because…

The ucsspm is an open-source clear-sky prediction model, incorporating math algorithms based on latest research by the Environmental and Water Resources Institute of the American Society of Civil Engineers and a few veteran but still valid and publicly available NOAA/NASA computations. It has been around for a while but received a major revision, code refactoring and got bumped to primary project status, since it's becoming an essential tool in predicting Apollo-NG deployment location feasibility.
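For readers unfamiliar with this kind of model, the following is a minimal sketch of one ingredient that solar radiation models commonly start from: the irradiance arriving at the top of the atmosphere. It is not taken from the ucsspm code. The function names are made up for illustration, 1367 W/m² is a commonly used value for the solar constant, and the cosine term is the usual textbook approximation for the Earth-Sun distance over the year. A real clear-sky model additionally has to account for atmospheric attenuation, which is left out here.

```python
import math

SOLAR_CONSTANT = 1367.0  # W/m^2, commonly used value (assumption)


def extraterrestrial_irradiance(day_of_year):
    """Irradiance on a surface facing the sun, above the atmosphere (W/m^2)."""
    # Textbook approximation of the Earth-Sun distance variation
    correction = 1.0 + 0.033 * math.cos(2.0 * math.pi * day_of_year / 365.0)
    return SOLAR_CONSTANT * correction


def horizontal_irradiance(day_of_year, zenith_deg):
    """Same, projected onto a horizontal plane; 0 when the sun is below the horizon."""
    cos_zenith = math.cos(math.radians(zenith_deg))
    return max(0.0, extraterrestrial_irradiance(day_of_year) * cos_zenith)


if __name__ == "__main__":
    for day in (1, 91, 182, 274):
        print(day, round(horizontal_irradiance(day, zenith_deg=30.0), 1))
```

The result is an upper bound for what can reach a panel on the ground, which is useful as a sanity check for any measured or predicted value.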

A long-term test setup collecting simulation and reference measurement data for the odyssey and aquarius has been set up and will be published soon as well. The first results look quite promising: it's definitely on the right track to become a unified, reliable and open clear-sky global solar radiation prediction model. We are looking forward to you using, reviewing, verifying and enhancing it as well.

The aquarius needs a galley in order to prepare and cook food, but due to unforeseen personal circumstances I had to invest in this infrastructure way before it was actually necessary, since the base trailer for the LM isn't available yet. So I've started to build a prototype kitchen with all that is needed for functional and fun food hacking. One of the primary energy carriers selected for cooking is gas. That can be either LPG (Propane/Butane) or Methane (delivered by utility gas lines).

Since gases can be tricky and risky energy carriers and their combustion process also creates potentially harmful by-products like Carbon-Monoxide (CO), it seemed prudent to have an autonomous environmental monitoring and gas leakage detection system, in order to minimize the risk of an undetected leak, which could lead to potentially harmful explosions or a high concentration of CO, which could also lead to unconsciousness and death. Monitoring the temperature and humidity might also help in preventing moisture buildup which often leads to fungi problems.

To cover everything, a whole team of sensors monitors specific environmental targets and their data will then be fused to form a basis for air quality analysis and threat management to either proactively start to vent air or send out warnings via mail/audio/visual.

| Sensor | Target | Description | Placement |
|---|---|---|---|
| MQ7 | CO | Carbon-monoxide (Combustion product) | Top/Ceiling |
| MQ4 | CH4 | Methane (Natural Gas) | Top/Ceiling |
| MQ2 | C3H8 | Propane (Camping Gas Mix) | Bottom/Floor |
| MQ2 | C4H10 | Butane (Camping Gas Mix) | Bottom/Floor |
| SHT71 | Temp/Humidity | Room/Air Temperature & Humidity Monitoring | Top |
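The fusion and threat management step could look roughly like the sketch below. Everything in it is an assumption made for illustration: the reading names, the `assess` helper and especially the threshold values are hypothetical placeholders, not calibrated limits, and the sketch assumes the raw MQ sensor voltages have already been converted to ppm elsewhere.

```python
# Hypothetical (warn, alarm) thresholds per reading; placeholder values only,
# not calibrated limits. Readings are assumed to be converted to ppm / percent.
THRESHOLDS = {
    "co_ppm": (30, 100),        # MQ7
    "ch4_ppm": (1000, 5000),    # MQ4
    "lpg_ppm": (1000, 5000),    # MQ2 (propane/butane)
    "humidity_pct": (70, 85),   # SHT71
}

ACTIONS = ("ok", "vent", "alarm")


def assess(readings):
    """Fuse all readings into one action plus the list of readings that caused it."""
    level = 0
    causes = []
    for key, (warn, alarm) in THRESHOLDS.items():
        value = readings.get(key)
        if value is None:
            continue  # sensor not (yet) reporting
        if value >= alarm:
            level = max(level, 2)
            causes.append(key)
        elif value >= warn:
            level = max(level, 1)
            causes.append(key)
    return ACTIONS[level], causes


if __name__ == "__main__":
    print(assess({"co_ppm": 50, "humidity_pct": 55}))
```

Taking the worst level over all sensors keeps the logic simple: a single sensor above its warning level starts venting, a single sensor above its alarm level triggers the warnings via mail/audio/visual.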

As a platform for this project a spark-core was selected, since it's a low power device with wireless network connectivity, which has to be always on, to justify its existence. The Spark-Core docs claim 50mA typical current consumption but it clocked in here with 140mA (Tinker Firmware - avg(24h)). After setting up a local spark cloud and claiming the first core it was time to tinker with it.
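To put the difference between the documented and the measured current into perspective for an always-on device, here is a small back-of-the-envelope calculation. The helper names are made up, and the 5 V supply voltage is an assumption (USB supply); adjust it to the actual setup.

```python
def daily_charge_ah(current_ma, hours=24.0):
    """Charge drawn per day in ampere-hours for a constant average current."""
    return current_ma / 1000.0 * hours


def daily_energy_wh(current_ma, supply_v=5.0, hours=24.0):
    """Energy per day in watt-hours; supply_v = 5.0 is an assumed USB supply."""
    return daily_charge_ah(current_ma, hours) * supply_v


if __name__ == "__main__":
    for label, current_ma in (("documented", 50), ("measured", 140)):
        print(f"{label}: {daily_charge_ah(current_ma):.2f} Ah/day, "
              f"{daily_energy_wh(current_ma):.1f} Wh/day")
```

At 140 mA the core draws about 3.4 Ah per day instead of the 1.2 Ah the documented 50 mA would suggest, which matters on a constrained off-grid power budget.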

The default firmware (called tinker) already gets you started quickly with no fuss: You can read and control all digital and analog in- and outputs. With just a quick GNUPlot/watch hack I could monitor what an MQ2 sensor detects over the period of one evening, without even having to hack on the firmware code itself (fast bootstrapping to get a first prototype/concept).
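The same kind of polling can be sketched in a few lines of Python. This is not the GNUPlot/watch hack itself, just an illustration of the idea. It assumes the cloud API exposes the tinker `analogread` function under `/v1/devices/<id>/analogread` and answers with a JSON body containing `return_value`; the base URL, device ID, token and pin used in the usage comment are placeholders.

```python
import json
import urllib.parse
import urllib.request


def build_request(base_url, device_id, token, pin="A0"):
    """Build the POST request that asks the tinker firmware to read an analog pin."""
    url = f"{base_url}/v1/devices/{device_id}/analogread"
    data = urllib.parse.urlencode({"access_token": token, "params": pin}).encode()
    return urllib.request.Request(url, data=data, method="POST")


def parse_reading(body):
    """Extract the raw ADC value from the JSON response (assumed 'return_value' field)."""
    return int(json.loads(body)["return_value"])


def read_pin(base_url, device_id, token, pin="A0", timeout=10):
    """Perform one read; raises on network or HTTP errors."""
    request = build_request(base_url, device_id, token, pin)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_reading(response.read())


# Usage (placeholders, e.g. against a local cloud):
#   import time
#   while True:
#       print(time.time(), read_pin("http://localhost:8080", "DEVICE_ID", "TOKEN"))
#       time.sleep(5)
```

Redirecting the printed timestamp/value pairs into a file gives something a plotting tool can pick up directly.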

In order to learn more about GNU Radio and HackRF, so that tackling more complex scenarios like darc-side-of-munich-hunting-fm-broadcasts-for-bus-and-tram-display-information-on-90-mhz becomes easier, it was time to go for a much simpler training target:

Those cheap RF controlled wall plugs you can use to remote control the power outlets. Now, I'm not talking about FS20, x10 or HomeMatic devices but the really cheap ones you can usually buy in sets of three combined with a handheld remote controller for 10-20 EUR.

The goal here is to find out, how long it actually takes to reverse engineer this particular (at least 10 year old) system and achieve full remote control capability using HackRF and other open-source tools.

It took quite a bit of tinkering and a couple of clarifying sessions on IRC (Thanks to lbt and aholler for their input and support), to deploy the local Spark-Cloud test setup and interpret/abstract the scattered docs into one whole system view model. But why go through all this hassle, when you can just comfortably use the “official” spark.io cloud service to develop & manage your cores instantly?

Well, the IoT (Internet of Things) is a hip buzzword these days and the Spark-Cores definitely can be categorized as the first generation of open, relatively cheap and hackable wireless IoT devices.

For all we know, it is at least safe to assume that we actually have no way of knowing how far this technology branch is going to develop and spread in a couple of years, just like the Internet itself 20 years ago. We should look at the privacy aspects before it's actually too late to do so. In the end, it boils down to this question:

Do we really want to give out our complete sensory data (sys/env/biometrics) over all time and possibly full remote control over all the actors, built into everything, at all time, at the place we like to call our home?

Some people may not have realized yet that we've got plenty of open-source tools to store, analyze, link and visualize billions of data rows quickly and with great ease. Imagine what people with a multi-billion budget are able to employ. To give you a small-scale example of how transparent anyone's little life and habits become, I've created a dashboard which doesn't show many metrics yet (more are in the works), but it's more than enough if you learn how to interpret the graphs. The data you see there is mostly generated by two spark-cores which are deployed here. Big/Open-Data/Cloud technology is not the problem itself, it's our culture/society, which obviously isn't ready for it.

In the year 2014, in a post Olympic Games (Stuxnet) & Nitro Zeus, Snowden & Lavabit era, we have no other choice but to come out of our state of denial and simply accept the fact that every commercial entity can be compromised through multiple legal, administrative, monetary, social/personal or technological levers. Access and cloud providers are no exception. As repeatedly shown, all of them can be tricked, coerced or forced to “assist” in one way or another. No matter what anyone promises, from this point on, they all have to be considered compromised.

The current software implementation (firmware- and server-side) has no concept of mesh/p2p or direct networking/communication. All Spark-Cores need a centralized spark-server for Control & Communication. Also, the Spark.connect() routine unfortunately has no timeout (yet?), so the Core might hang indefinitely, for example when your WiFi or internet access is offline. This could be a big problem even if your particular code doesn't need a cloud connection, because after you call Spark.connect(), your loop will not be called again until the Core finishes connecting to the Cloud.

In this picture, the blue lines represent the data flow between the Cores, the clients and the central server. All points marked with a red C show where the current implementation/infrastructure has to be considered compromised, and the yellow P marks potential security risks: since the firmware isn't compiled locally, theoretically anything can be injected into it, either in the AWS cloud or even in-stream. Tests showed that the API webservers don't offer perfect forward secrecy, and the cores themselves use only AES-128-CBC without DH support, which offers no forward secrecy at all. Not having reliable crypto and passing everything through compromised infrastructure can't be the way to go. Not to mention the additional bandwidth this concept ultimately requires, when you consider the available IPv6 address space and the fair likelihood that, in the not so distant future, there will be more IoT clients connected to the Internet than there are humans.
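A quick way to repeat the forward secrecy check for a web server is sketched below. It is not the test setup used for the results above, and the function names are made up. The sketch only inspects the cipher suite that gets negotiated with this particular client, so it says nothing about other suites the server might also accept. It relies on the OpenSSL naming convention where ephemeral Diffie-Hellman suites start with `ECDHE` or `DHE`, and on the fact that all TLS 1.3 suites use ephemeral key exchange.

```python
import socket
import ssl


def has_forward_secrecy(cipher_name, protocol):
    """Decide from the negotiated suite whether the key exchange was ephemeral."""
    if protocol == "TLSv1.3":
        return True  # TLS 1.3 only defines ephemeral key exchanges
    return cipher_name.startswith(("ECDHE", "DHE"))


def negotiated_cipher(host, port=443, timeout=10):
    """Connect once and return (cipher_name, protocol) as negotiated with this client."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            name, protocol, _secret_bits = tls.cipher()
            return name, protocol


# Usage (the host name is a placeholder):
#   name, protocol = negotiated_cipher("api.example.org")
#   print(name, protocol, has_forward_secrecy(name, protocol))
```

A suite such as `AES128-SHA` (plain RSA key exchange) fails the check, which is the situation described above: anyone who records the traffic and later obtains the server key can decrypt it.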