Rustic Retreat


Live broadcasts and documentation from a remote tech outpost in rustic Portugal. Sharing off-grid life, the necessary research & development and the pursuit of life, without centralized infrastructure.

Subscribe to our new main project Rustic Retreat on the project's own website.


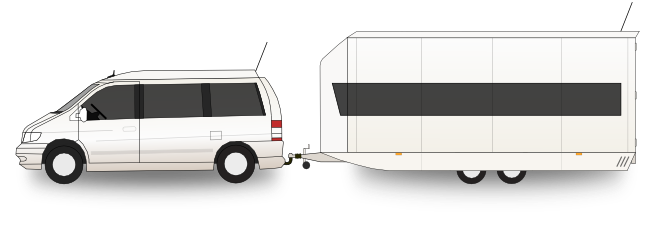

Apollo-NG is a mobile, self-sustainable, independent and highly experimental Hackbase, focused on the research, development and use of next-generation open technology while visiting places without a resident local Hackerspace, and offering other Hackers the opportunity to work together on exciting projects and to share fun, food, tools & resources, knowledge, experience and inspiration.

It's been quite a while since anything moved here. I got sick and was down for more than three weeks, and directly after that it was time to start moving out of the flat I've occupied for the last decade and to clear out the rest of the stuff I've gathered over the years that doesn't belong on the Apollo-NG cargo manifest. This is the last stepping stone before I can finally sustain myself on the road with what I have on board.

Unfortunately, this pushed the start of the PiGI crowdfunding campaign back even further. I didn't want to launch it while everything I need to run and fulfill it successfully is packed in boxes. That would be too much of a handicap for now and would only increase the risk of further delays. Iggy and I will start the campaign as soon as we are somewhat operational again.

Over the last couple of days, the numbers from the market analysis haven't changed much. Based on the latest numbers, it would make sense to produce a mini series of 100 PiGIs, which will be the basis of the upcoming crowdfunding campaign.

Unfortunately, the crowdfunding portals don't support tiered pricing that automatically adjusts the per-unit price to the number of pledging backers and lowers it as the number of units grows. Judging by the price scaling, 1000 units would have made quite a difference.

If we set the price too low because we hope more people will join the campaign, we might end up paying out of our own pockets if we don't reach the numbers needed to break even. If we set the price too high to mitigate that risk and far more people pledge than originally indicated here, the end users will pay much more than the units cost to produce. Apollo-NG is a non-profit project, and making a hefty profit by selling the units for more than they actually cost to produce would be no good. So what are your thoughts on this issue?
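The dilemma can be written down under a deliberately simplified cost model. This is an assumption for illustration, not the actual PiGI calculation. Let F be the fixed costs of the whole production run (setup, tooling), v the variable cost per unit, n the number of units actually ordered and p the price per unit fixed in advance:

```latex
\begin{aligned}
c(n) &= \frac{F}{n} + v \\
p\,n \ge F + v\,n \;&\iff\; p \ge c(n) \\
p - c(n) &= p - v - \frac{F}{n}
\end{aligned}
```

The first line is the cost per unit at volume n. The second is the break-even condition. The third is the surplus (or, if negative, the loss) per unit. Because c(n) falls as n grows, a price set to c(100) for the planned mini series turns into a surplus for every unit if more people pledge. If fewer pledge, it turns into a loss. A portal with tiered pricing would instead keep p close to c(n).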

I like to use the term OVEP (Open Virtual Enterprise Planning) to describe a method and a set of tools to change how people produce stuff. No more secrets, no hidden agendas or plans, no bullshit and especially no extra costs for marketing, sales, legal or executive bonuses, which make everything artificially expensive. Every aspect of developing and producing the product is public and transparent. The PiGI pages are basically the business plan for this single product, so everyone can understand how it is built and what costs are really involved.

The campaign will probably launch within the next 10-14 days, so that it can progress while the upcoming DSpace Hackathon in Graz is underway during the second half of August. Stay tuned, subscribe to the RSS feed and join the announce mailing list to get notified when the campaign goes public.

If you're using Elasticsearch, sooner or later you'll have to deal with disk space issues. The setup I currently manage ingests 200 to 300 million documents every 24 hours, so I needed a solution that always guarantees enough free disk space to keep Elasticsearch from failing.

The following bash script is the latest incarnation of my quest for an automatic “ringbuffer”:

```bash
#!/bin/bash

LOCKFILE=/var/run/egc.lock

# Check if Lockfile exists and the process it names is still alive
if [ -e "${LOCKFILE}" ] && kill -0 "$(cat "${LOCKFILE}")" 2>/dev/null; then
    echo "EGC process already running"
    exit 1
fi

# Make sure the Lockfile is removed
# when we exit and then claim it
trap 'rm -f "${LOCKFILE}"; exit' INT TERM EXIT

# Create Lockfile
echo $$ > "${LOCKFILE}"

# Always keep a minimum of 30GB free in logdata
# by sacrificing oldest index (ringbuffer).
# df -Pk keeps each filesystem on one line; column 4 is free space in 1K blocks.
DF=$(/bin/df -Pk /dev/md0 | awk 'NR == 2 {print $4}')

if [ "${DF}" -le 30000000 ]; then
    INDEX=$(/bin/ls -1td /logdata/dntx-es/nodes/0/indices/logstash-* | tail -1 | xargs -r -n 1 basename)
    # Only call the API if an index was actually found
    if [ -n "${INDEX}" ]; then
        curl -XDELETE "http://localhost:9200/${INDEX}"
    fi
fi

# Check & clean elasticsearch logs
# if disk usage is >= 10GB (du -s prints one total instead of one line per subdirectory)
DU=$(/usr/bin/du -s /var/log/elasticsearch/ | awk '{print $1}')

if [ "${DU}" -ge 10000000 ]; then
    rm -f /var/log/elasticsearch/elasticsearch.log.20*
fi

# Remove Lockfile
rm -f "${LOCKFILE}"

exit 0
```

Make sure to check and modify the script to reflect your particular setup. Your paths and device names are very likely different.

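It runs every 10 minutes as a cron job and checks the available space on the device where Elasticsearch stores its indices. In this example, /dev/md0 is mounted on /logdata. If md0 has less than 30GB of free disk space (df reports 1K blocks, so the threshold is 30000000), the script automagically finds the oldest Elasticsearch index, meaning the logstash-* directory with the oldest modification time. It then drops that index via Elasticsearch's REST API without any service interruption (no stop/restart of Elasticsearch required). It also deletes the rotated Elasticsearch logs once /var/log/elasticsearch grows to 10GB or more.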
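The script above locates the oldest index through directory modification times on disk. An alternative is to ask Elasticsearch itself for the list of indices via the `_cat/indices` API and sort the names. The sketch below does this and assumes the default daily `logstash-YYYY.MM.DD` index names. `ES_URL`, `MOUNTPOINT` and `MIN_FREE_KB` are hypothetical settings whose defaults mirror the example above. As far as I know, this matters on Elasticsearch 5.0 and later: there, index directories on disk are named after index UUIDs rather than index names, so the `logstash-*` glob finds nothing.

```bash
#!/bin/bash
# Sketch: find the oldest logstash-* index via the Elasticsearch REST API
# instead of directory mtimes. ES_URL, MOUNTPOINT and MIN_FREE_KB are
# hypothetical settings; the defaults mirror the example above.

ES_URL="${ES_URL:-http://localhost:9200}"
MOUNTPOINT="${MOUNTPOINT:-/logdata}"
MIN_FREE_KB="${MIN_FREE_KB:-30000000}"   # ~30GB in 1K blocks

# Print the free space (1K blocks) of the filesystem that holds $1.
# -P keeps each filesystem on a single line, -k forces 1K blocks.
free_kb() {
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# List logstash indices, one per line (h=index selects only the name column).
list_indices() {
    curl -s "${ES_URL}/_cat/indices/logstash-*?h=index"
}

# Read index names on stdin and print the oldest one. Assumes daily
# logstash-YYYY.MM.DD names, where lexical order equals chronological order.
# awk also strips any column padding and drops non-matching lines.
pick_oldest_index() {
    awk '$1 ~ /^logstash-/ { print $1 }' | sort | head -n 1
}

main() {
    local avail index
    avail=$(free_kb "$MOUNTPOINT")
    if [ -z "$avail" ]; then
        echo "egc: cannot read free space of ${MOUNTPOINT}" >&2
        return 1
    fi
    if [ "$avail" -le "$MIN_FREE_KB" ]; then
        index=$(list_indices | pick_oldest_index)
        # Skip the DELETE when no matching index was found
        if [ -n "$index" ]; then
            curl -s -XDELETE "${ES_URL}/${index}"
        fi
    fi
}

# Run main only when executed directly, not when sourced (e.g. for testing)
if [ "${BASH_SOURCE[0]:-}" = "$0" ]; then
    main
fi
```

Because the index list comes from the cluster, this variant does not depend on the on-disk data path layout. Sorting by name is only safe if every matched index follows the same date pattern. If you mix naming schemes, tighten the `awk` filter.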

A simple locking mechanism prevents multiple instances from running at the same time, for example if one run takes longer than the 10-minute interval. All you need is curl. The script increases your storage efficiency: you can always keep as much past data available as your storage allows, without the risk of a full disk or the hassle of manual monitoring and maintenance.
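The PID-file check is not atomic. Two instances started at the same moment can both pass the test before either one writes its PID. One alternative is `flock(1)` from util-linux, which takes the lock atomically and releases it automatically when the holding process exits. The sketch below is minimal, and the helper name `run_locked` and the `LOCKFILE` default are hypothetical.

```bash
#!/bin/bash
# Sketch: atomic single-instance locking with flock(1) from util-linux,
# as an alternative to the PID-file check. LOCKFILE and run_locked are
# hypothetical names chosen for this example.

LOCKFILE="${LOCKFILE:-/var/run/egc.flock}"

# Run "$@" while holding an exclusive lock on $LOCKFILE.
# -n makes flock fail immediately instead of waiting if another
# process already holds the lock. The lock lives on file descriptor 9
# and is released when the subshell exits, even if it is killed,
# so no stale lock ever needs to be cleaned up.
run_locked() {
    (
        flock -n 9 || { echo "EGC process already running" >&2; exit 1; }
        "$@"
    ) 9>"$LOCKFILE"
}
```

Inside the script, you would move the df/du cleanup into a function (say, a hypothetical `cleanup`) and call `run_locked cleanup` instead of using the PID-file block. Alternatively, cron can take the lock directly with a user crontab entry such as `*/10 * * * * flock -n /var/run/egc.flock /usr/local/sbin/egc.sh`, where the script path is hypothetical. Use a different file than the script's PID lockfile. Otherwise the script's own `rm -f` would delete the file that flock is holding.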